Artificial intelligence (AI) is reshaping industries worldwide. Amid the excitement over AI’s transformative power, one concern looms: its impact on energy consumption. While data centers currently account for a small percentage of US electricity usage, utility companies are already preparing for demand to keep growing. But there is an offset. Even as AI’s appetite for energy appears boundless, the technology has become more efficient with every generation, and there is potential for further large improvements.

Data centers consume a small, but growing, percentage of total electricity in the US. Statistics from the US Energy Information Administration (EIA) show that ‘computers and related equipment’ accounted for 2.3% of total electricity demand in 2022. Goldman Sachs estimated that the share rose to roughly 3.0% in 2023, spurred by the proliferation of AI training. This roughly 30% increase in computer equipment’s share of electricity demand in a single year is eye-catching, and the trajectory of growth is causing utility companies to reassess their capacity plans.
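The roughly 30% figure is the relative change in the share, which a few lines of arithmetic can confirm. The sketch below uses only the two percentages quoted above. The variable names are illustrative, and it assumes the EIA and Goldman Sachs figures measure comparable categories, which the sources quoted here do not state explicitly.

```python
# Shares of total US electricity demand quoted in the article.
share_2022 = 0.023  # EIA: 'computers and related equipment', 2022
share_2023 = 0.030  # Goldman Sachs estimate, 2023

# Relative growth of the share itself.
relative_growth = share_2023 / share_2022 - 1

# Absolute change, in percentage points of total demand.
point_change = (share_2023 - share_2022) * 100

print(f"Relative growth in share: {relative_growth:.1%}")
print(f"Change in share: {point_change:.1f} percentage points")
```

Growth in the share equals growth in consumption only if total electricity demand was flat over the same period.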

Looking forward, utility companies are preparing for significant expansion in electricity supply. While headline-grabbing announcements hint at exponential growth, they often distort the underlying realities. For instance, Greg Abel, chairman and CEO of Berkshire Hathaway Energy, noted at the 2024 Berkshire Hathaway Annual Meeting that MidAmerican Energy expects to double capacity by 2030. Similarly, NV Energy anticipates supplying over 4 gigawatts (GW) of electricity to data centers by 2030 to fulfill contracts it recently signed with Apple and Microsoft, among other West Coast technology companies. That’s almost three times its current capacity of approximately 1.5 GW.

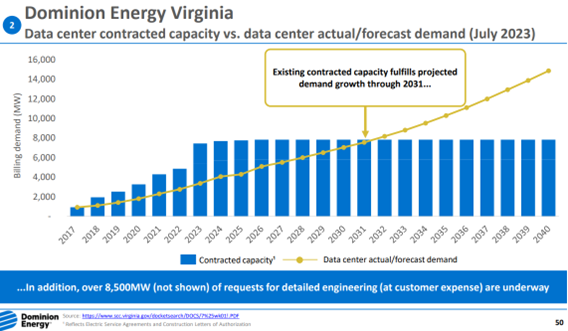

However, it’s important to note that these local investments aren’t necessarily a response to grid-stressing growth; rather, they reflect strategic partnerships and advance planning as data centers expand across the nation. Capacity expansion projects on this scale have already been executed by Dominion Energy, a major utility in the eastern US. The company currently supplies an estimated 3.4 GW of electricity to the world’s largest data center cluster in Northern Virginia and plans to grow capacity to nearly 8.0 GW by 2025. This expansion would meet demand out to 2031, per company estimates, and alleviate potential stresses for years.

Slide courtesy of Dominion Energy Investor Relations

MidAmerican Energy’s plans to provide roughly 0.5 GW, and NV Energy’s plans to become the provider of choice on the West Coast with 4 GW, both seem reasonable in the context of the industry. These companies also expect to harvest more solar and wind resources, given their geographies, to help lower the cost of energy and reduce the burden on the power grid. In short, grid operators are already planning for large expansions, and they can look to Dominion’s playbook to further raise the odds of success.

Offsetting concerns about escalating energy consumption are the generational efficiency gains seen in Nvidia’s AI chips. The company has a history of innovative designs that improve energy efficiency while still increasing AI performance. Its latest generation is no exception. Nvidia’s newest AI chip, known as Blackwell, is estimated to perform roughly 2.5x better than the current Hopper chips on AI-focused tasks, per Nvidia estimates. These new systems are expected to consume about 1,000 watts, or roughly 40% more than Hopper’s current 700 watts. Taken together, these figures imply roughly 75% to 80% more AI performance per watt, so a given training job may need both less time and less energy. It’s a remarkable gain considering the roughly 18 months between system debut dates, and it underscores the potential for continued progress in semiconductor efficiency.
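The efficiency estimate follows from dividing the performance gain by the increase in power draw. Here is a minimal sketch of that arithmetic, assuming the rounded Nvidia figures quoted above and assuming that training time scales inversely with performance. The function and variable names are illustrative only.

```python
# Rounded figures quoted in the article (Nvidia estimates, not measurements).
HOPPER_WATTS = 700
BLACKWELL_WATTS = 1_000
PERFORMANCE_RATIO = 2.5  # Blackwell vs. Hopper on AI-focused tasks


def efficiency_comparison(perf_ratio, old_watts, new_watts):
    """Return (power ratio, performance-per-watt gain, energy-per-task change)."""
    power_ratio = new_watts / old_watts
    # More work per second divided by more watts drawn.
    perf_per_watt_gain = perf_ratio / power_ratio - 1
    # Assumption: the same job finishes perf_ratio times faster,
    # so energy per job = power * time scales by power_ratio / perf_ratio.
    energy_per_task_change = power_ratio / perf_ratio - 1
    return power_ratio, perf_per_watt_gain, energy_per_task_change


power_ratio, perf_gain, energy_change = efficiency_comparison(
    PERFORMANCE_RATIO, HOPPER_WATTS, BLACKWELL_WATTS
)
print(f"Power draw change: {power_ratio - 1:+.0%}")
print(f"Performance per watt change: {perf_gain:+.0%}")
print(f"Energy per training job change: {energy_change:+.0%}")
```

With the unrounded power ratio (1,000 / 700), the gain in performance per watt comes to about 75%. Rounding the power increase to 40% first gives about 79%, which is where a figure near 80% comes from.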

The rise of AI has prompted an expansion in electricity capacity. Estimates show that data centers could consume 8% of all electricity by 2030, up from today’s roughly 3% share. It’s a testament to how impactful this technology may become, but it also poses challenges for utility companies. Proactive measures, such as advance planning alongside customers and building excess capacity, have helped ease potential stresses on the power grid. And further innovations in semiconductor design hold the promise of continued efficiency gains. There are plenty of pathways ahead to ensure both AI and our everyday lives remain powered up.
